A Noob's Guide to GPT-3: First Impressions

Once upon a time, I wanted to be a writer. In the fourth grade, I wrote an article about how you could catch a fish by wrapping a string around a peanut butter sandwich and then throwing it in the water. I remember my teacher, Mrs. Thaler, laughing and saying, “That’s the most ridiculous thing I’ve ever heard!” I guess she wasn’t impressed with my writing skills.

Can you believe a computer wrote that entire passage? 😲

This week I had a chance to experiment with OpenAI’s API, which is still in private beta. There’s been a lot of buzz on Twitter about their new GPT-3 language model and its ability to generate eerily human-like writing, so I was excited to try it out.

As someone with only basic knowledge of AI and machine learning, I oscillated between feelings of wonder and terror at some of GPT-3’s output. Yet with this API, its capabilities can be harnessed by developers to power radically new kinds of user experiences in the near future.

For this reason, it’s worth understanding what GPT-3 actually is, how it was trained, and how it works under the hood. In this post I’ll be sharing my notes on the technology.

What exactly is GPT-3?

GPT-3 is a language model developed by OpenAI that aims to predict the next word (more precisely, the next word fragment) in a body of text, using the preceding words for context. It is powered by a neural network, and its predictions are computed from weight parameters that together determine how likely each word fragment is to come next.
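To make "predict the next word" a bit more concrete, here is a toy sketch I find helpful. To be clear, this is not how GPT-3 works inside: it just counts which word follows which in a tiny made-up sentence, whereas GPT-3 uses a neural network with learned weights. The function names and the sample sentence are my own inventions for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn raw follow-counts into probabilities for the next word."""
    followers = counts.get(prev.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # word never seen, so no prediction is possible
    return {word: count / total for word, count in followers.items()}


def predict_next(counts, prev):
    """Pick the single most likely next word, or None if unseen."""
    probs = next_word_probs(counts, prev)
    return max(probs, key=probs.get) if probs else None


# Hypothetical toy "training data" (GPT-3's real data is vastly larger).
corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigram(corpus)

print(next_word_probs(model, "the"))
print(predict_next(model, "the"))
```

In this toy, "the" is followed by "cat" twice and by "mat" and "fish" once each, so "cat" comes out as the most likely next word. The big difference with GPT-3 is that it looks at much more than one preceding word and learns its probabilities rather than counting them.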

These parameters were learned by training the model on a massive dataset of text sourced from the internet (Common Crawl), two libraries of books, and English-language Wikipedia. GPT-3 has 175 billion parameters, more than 100 times as many as its predecessor, GPT-2, which was released last year and had only 1.5 billion.

This scale is what sets GPT-3 apart from other language models and enables it to generate highly coherent, grammatically correct, and often surprisingly readable text.

Using GPT-3 for the first time

OpenAI has not released the actual GPT-3 model due to the potential for deliberate misuse and issues of bias that are still being addressed. Instead, the model is accessed through an API that can be called from any web application.

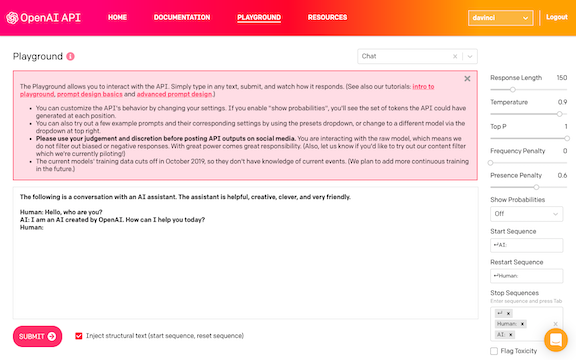

There is also a playground tool that allows you to interact with the API online.

From the API documentation:

To use the API, you simply give it a text prompt […] and it will return a text completion, attempting to match the context or pattern you gave it. You can “program” it by crafting a description or writing just a few examples of what you’d like it to do. Its success generally varies depending on how complex the task is.
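Here is a rough sketch of what "writing just a few examples" can look like as a prompt. It only builds the prompt text and does not call the API. The `build_few_shot_prompt` helper and the "Input:"/"Output:" labels are my own assumptions, not something the documentation prescribes, and the translation pairs are made up for illustration.

```python
def build_few_shot_prompt(description, examples, query):
    """Assemble a text prompt from a task description, a few worked
    examples, and a final unanswered input for the model to complete.

    The "Input:"/"Output:" layout is a hypothetical convention; any
    consistent pattern could be used instead.
    """
    lines = [description, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # Leave the last output blank so the completion fills it in.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("good morning", "bonjour")],
    "thank you",
)
print(prompt)
```

The idea is that the prompt ends mid-pattern, so the most natural continuation is the answer you are after. You would then paste this text into the playground or send it as the prompt in an API request.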

My initial prompts yielded text completions that often read like a wacky Mad Libs story. But as my prompts improved, so did GPT-3’s output. The completions weren’t always perfect, and some cherry-picking was needed to produce a good output.

I was eventually able to generate a mock speech about an alien invasion by an imaginary President Snoop Dogg:

Ladies and gentlemen, I’m here today to inform you that aliens have invaded our country. It’s real. I seen it with my own eyes. It’s as real as Snoop Dogg. I was chillin’ in my crib, smokin’ the chronic, when I heard a sound like a million Mack trucks had just crashed into my house. I looked out my window and seen a giant mothership. I grabbed my gat and went outside. That’s when I seen the aliens. They were short and green and ugly as hell. I thought to myself, ‘Damn, I need to get my camera and record this shit.’ I ran back in the house and grabbed my camcorder, but when I went back outside, they was gone. But I know they was here. I seen their footprints in the grass. And that ain’t all I seen. I also seen them abducting people. They took one of my homeboys, Rufus. I tried to stop them, but they zapped me with some kinda ray gun and put me in some kind of trance. I woke up three days later on my living room floor. I went outside and seen a bunch of green alien shit everywhere. I think they’re trying to take over our planet.

What’s next? [1]

As an early-stage technology, it’s important to understand that GPT-3 is still imperfect and prone to errors. This is why the API is still in private beta and not yet available to the public.

It also raises all kinds of questions about the future of AI and machine learning, and how it will impact our society. If this kind of technology is widely available to the public, then will we have to update our existing laws about computer-generated speech?

I’m excited about GPT-3’s potential, and I’m looking forward to seeing what developers will be able to build with it. I’ve only scratched the surface of its capabilities and there are a lot of interesting things to discover.

Notes

[1] This section was entirely generated using GPT-3!

To learn more, check out the GPT-3 paper “Language Models are Few-Shot Learners” and the OpenAI blog.

For more frequent updates, follow @blythe_field on Twitter.